Floating-point representation is an alternative technique based on scientific notation.

We’d like to use scientific notation, but because computers work in binary, we’ll base it on powers of 2 rather than powers of 10.

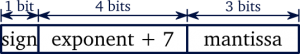

Once we have a number in binary scientific notation, we still must have a technique for mapping that into a set of bits:

In this 8-bit scheme, we use the first bit to represent the sign (1 for negative, 0 for positive), the next four bits for the sum of 7 and the actual exponent (we add 7 to allow for negative exponents), and the last three bits for the mantissa’s fractional part. Note that we omit the integer part of the mantissa: since the mantissa must have exactly one nonzero bit to the left of its binary point, and the only nonzero binary digit is 1, we know that the bit to the left of the binary point must be a 1. There’s no point in wasting space on storing this 1 in our bit pattern, so we include only the bits of the mantissa to the right of the binary point.
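As a rough illustration of this layout, the following Python sketch packs a value into the eight bits described above and unpacks it again. The names `encode`, `decode`, `BIAS` and `FRACTION_BITS` are made up for this example. The sketch assumes a nonzero, finite value whose exponent fits in the four exponent bits, and it ignores any special bit patterns a real format would reserve.

```python
import math

BIAS = 7           # added to the true exponent so negative exponents fit
FRACTION_BITS = 3  # mantissa bits stored to the right of the binary point


def encode(value: float) -> int:
    """Pack a nonzero finite value into 1 sign, 4 exponent and 3 mantissa bits."""
    if value == 0 or math.isinf(value) or math.isnan(value):
        raise ValueError("this sketch handles only nonzero finite values")
    sign = 1 if value < 0 else 0
    m, e = math.frexp(abs(value))         # abs(value) == m * 2**e, 0.5 <= m < 1
    significand, exponent = m * 2, e - 1  # rescale so 1 <= significand < 2
    # Keep only three fraction bits, rounding to the nearest storable value.
    fraction = round((significand - 1) * 2**FRACTION_BITS)
    if fraction == 2**FRACTION_BITS:      # rounding carried into the integer part
        fraction, exponent = 0, exponent + 1
    biased = exponent + BIAS
    if not 0 <= biased <= 15:
        raise ValueError("exponent does not fit in four bits")
    return (sign << 7) | (biased << FRACTION_BITS) | fraction


def decode(bits: int) -> float:
    """Rebuild the value, restoring the implicit leading 1 of the mantissa."""
    sign = -1.0 if bits >> 7 else 1.0
    biased = (bits >> FRACTION_BITS) & 0b1111
    fraction = bits & 0b111
    return sign * (1 + fraction / 2**FRACTION_BITS) * 2.0 ** (biased - BIAS)


print(format(encode(5.5), "08b"))  # 01001011
print(decode(0b01001011))          # 5.5
```

Here 5.5 is 1.011 × 2^2 in binary scientific notation, so the sign bit is 0, the exponent bits are 2 + 7 = 9 = 1001, and the mantissa bits are 011.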

We call this floating-point representation because the values of the mantissa bits “float” along with the binary point, based on the exponent’s given value. This is in contrast to fixed-point representation, where the binary point is always in the same place among the bits given.
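One visible consequence of the floating point is that the gap between neighbouring representable values grows with the magnitude of the number. The short Python sketch below shows this for ordinary 64-bit doubles rather than the 8-bit format above. It assumes Python 3.9 or later for `math.ulp`, and the names `gaps` and `FIXED_STEP` are only illustrative.

```python
import math

# math.ulp(x) is the distance from x to the next larger representable double.
gaps = {x: math.ulp(x) for x in (1.0, 1024.0, 2.0**52)}
for x, gap in gaps.items():
    print(x, "-> gap to next value:", gap)

# A hypothetical fixed-point format with three fractional bits would instead
# step by the same amount everywhere, whatever the magnitude.
FIXED_STEP = 2.0**-3
print("fixed-point step:", FIXED_STEP)
```

The gap is tiny near 1 and reaches a whole unit at 2^52, whereas the fixed-point step never changes.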

Approximation

Squeezing infinitely many real numbers into a finite number of bits requires an approximate representation. Although there are infinitely many integers, in most programs the result of integer computations can be stored in 32 bits. In contrast, given any fixed number of bits, most calculations with real numbers will produce quantities that cannot be exactly represented using that many bits. Therefore the result of a floating-point calculation must often be rounded to fit back into its finite representation. This rounding error is the characteristic feature of floating-point computation.
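To make the rounding concrete, here is a small Python sketch that inspects the value actually stored for the literal 0.1 in a 64-bit double. The variable names are chosen for this example only.

```python
from decimal import Decimal
from fractions import Fraction

# Converting a float to Decimal or Fraction exposes the exact stored value.
stored_tenth = Decimal(0.1)
print(stored_tenth)              # not exactly 0.1

# The rounding error, computed exactly with rational arithmetic.
error = Fraction(0.1) - Fraction(1, 10)
print(error)

# Each operand and the sum are rounded, so the result misses 0.3.
print(0.1 + 0.2 == 0.3)          # False
```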

Comparison

Due to rounding errors, most floating-point numbers end up being slightly imprecise. As long as this imprecision stays small, it can usually be ignored. However, it also means that numbers expected to be equal (e.g. when calculating the same result through different correct methods) often differ slightly, and a simple equality test fails. For example:

double a = 0.15 + 0.15;

double b = 0.1 + 0.2;

if (a == b) // can be false!

if (a >= b) // can also be false!

The solution is to check not whether the numbers are the same, but whether their difference is very small. The error margin that the difference is compared to is often called epsilon.
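A minimal Python sketch of such a comparison follows. The helper `nearly_equal` and its parameters `rel_eps` and `abs_eps` are hypothetical names, and the default margins are arbitrary choices for illustration; suitable values depend on the application. Python's standard library offers `math.isclose` for the same purpose.

```python
import math

a = 0.15 + 0.15
b = 0.1 + 0.2


def nearly_equal(x: float, y: float,
                 rel_eps: float = 1e-9, abs_eps: float = 1e-12) -> bool:
    """True when x and y differ by no more than a small margin (epsilon)."""
    diff = abs(x - y)
    # Scale the margin with the operands, with an absolute floor near zero.
    return diff <= max(rel_eps * max(abs(x), abs(y)), abs_eps)


print(a == b)              # False
print(nearly_equal(a, b))  # True
print(math.isclose(a, b))  # True
```

Scaling the margin by the size of the operands matters because a fixed epsilon that suits values near 1 is far too strict for very large values and far too loose for very small ones.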

Rounding

Because floating-point numbers have a limited number of digits, they cannot represent all real numbers accurately: when there are more digits than the format allows, the leftover ones are omitted – the number is rounded. There are three reasons why this can be necessary:

- Too many significant digits – The great advantage of floating-point is that leading and trailing zeroes (within the range provided by the exponent) don’t need to be stored. But if the number still has more digits than the significand can hold once those zeroes are set aside, rounding becomes necessary. In other words, if your number requires more precision than the format provides, some of it has to be sacrificed. For example, with a floating-point format that has 3 digits in the significand, 1000 does not require rounding, and neither does 10000 or 1110, but 1001 does. Typical floating-point formats offer many significant digits, so this may seem like a rare problem, but a sequence of calculations, especially multiplications and divisions, can reach this point very quickly.

- Periodic digits – Any (irreducible) fraction whose denominator has a prime factor that does not occur in the base requires an infinite number of digits that repeat periodically after a certain point, and this already happens for very simple fractions. For example, in decimal 1/4, 3/5 and 8/20 are finite, because 2 and 5 are the prime factors of 10. But 1/3 is not finite, nor is 2/3 or 1/7 or 5/6, because 3 and 7 are not factors of 10. Fractions with a prime factor of 5 in the denominator can be finite in base 10, but not in base 2: 1/10 is simply 0.1 in decimal, yet it repeats forever in binary. This is the biggest source of confusion for most novice users of floating-point numbers.

- Irrational numbers – Irrational numbers such as π or the square root of 2 cannot be written as a fraction of two integers at all, and in positional notation (no matter what base) they require an infinite number of non-recurring digits.
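The three cases above can be tried out in a short Python sketch. It uses the standard `decimal` module to imitate a format with a 3-digit significand, and a hypothetical helper named `terminates` that tests whether a fraction has a finite expansion in a given base. Both are illustrations under these assumptions rather than how any particular hardware format works.

```python
import math
from decimal import Context, ROUND_HALF_EVEN
from math import gcd

# Case 1: a decimal format with only three significant digits.
three_digits = Context(prec=3, rounding=ROUND_HALF_EVEN)
for n in (1000, 10000, 1110, 1001):
    rounded = three_digits.create_decimal(n)
    print(n, "->", rounded, "(exact)" if rounded == n else "(rounded)")


# Case 2: does numerator/denominator have finitely many digits in this base?
def terminates(numerator: int, denominator: int, base: int) -> bool:
    denominator //= gcd(numerator, denominator)  # reduce the fraction first
    # Strip every prime factor that the denominator shares with the base.
    shared = gcd(denominator, base)
    while shared > 1:
        denominator //= shared
        shared = gcd(denominator, base)
    return denominator == 1  # anything left over forces repeating digits


print(terminates(8, 20, 10))  # True: 8/20 reduces to 2/5
print(terminates(1, 3, 10))   # False
print(terminates(1, 10, 2))   # False: finite in decimal, periodic in binary

# Case 3: the square root of 2 is irrational, so its stored value is rounded
# and squaring it does not give back exactly 2.
print(math.sqrt(2) ** 2 == 2)  # False
```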

